Quantum Computing

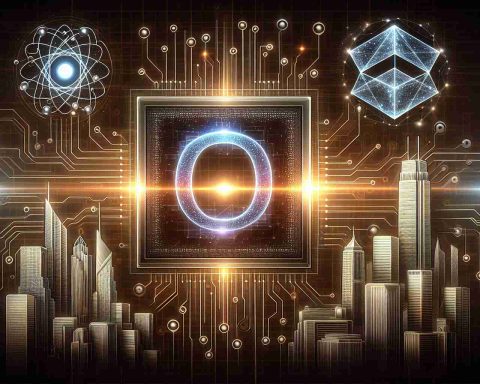

Quantum computing is a type of computing that uses the principles of quantum mechanics to process information. Classical computers store data in bits, each of which is either 0 or 1. Quantum computers instead use quantum bits, or qubits. Because of superposition, a qubit can be in a combination of 0 and 1 at the same time, and a register of n qubits is described by 2^n complex amplitudes, one for each possible bit string. Quantum algorithms manipulate these amplitudes so that interference reinforces correct answers and cancels wrong ones, which for certain problems yields large speedups over the best known classical methods.

Another key principle is entanglement: the states of two or more qubits become correlated in a way that cannot be described qubit by qubit, so measuring one constrains the outcome of measuring the other, regardless of the distance between them. Together with superposition and interference, entanglement is what allows quantum computers to tackle certain problems believed to be intractable for classical computers, such as factoring large integers (Shor's algorithm) or simulating quantum systems.

Quantum computing has potential applications in fields including cryptography, optimization, drug discovery, and materials science. As of now, however, practical, large-scale quantum computers remain largely experimental, and ongoing research aims to overcome challenges such as high error rates and short qubit coherence times.
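To make superposition and entanglement more concrete, here is a minimal state-vector sketch in plain Python. It is an illustration under stated assumptions, not how quantum hardware operates: it explicitly stores all 2^n amplitudes of an n-qubit register, and the helper names (`zero_state`, `apply_single`, `apply_cnot`, `measure`) and the qubit-ordering convention are hypothetical choices made for this example. A Hadamard gate puts one qubit into an equal superposition, and a Hadamard followed by a CNOT entangles two qubits into the Bell state (|00> + |11>)/sqrt(2).

```python
import math
import random

# Hypothetical sketch: an n-qubit state is a list of 2**n complex amplitudes.
# Index i, written in binary, is the classical bit string it represents;
# qubit 0 is taken to be the most significant bit (an assumed convention).

def zero_state(n):
    """Return the |00...0> state for n qubits (2**n amplitudes)."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    return state

def apply_single(state, n, target, gate):
    """Apply a 2x2 gate (nested lists) to qubit `target`."""
    new = [0j] * len(state)
    shift = n - 1 - target
    for i, amp in enumerate(state):
        if amp == 0:
            continue
        in_bit = (i >> shift) & 1
        for out_bit in (0, 1):
            # Same bit string, with the target bit set to out_bit.
            j = (i & ~(1 << shift)) | (out_bit << shift)
            new[j] += gate[out_bit][in_bit] * amp
    return new

def apply_cnot(state, n, control, target):
    """Flip the `target` bit on every basis state where `control` is 1."""
    new = [0j] * len(state)
    c_shift = n - 1 - control
    t_shift = n - 1 - target
    for i, amp in enumerate(state):
        j = i ^ (1 << t_shift) if (i >> c_shift) & 1 else i
        new[j] += amp
    return new

def probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|**2."""
    return [abs(a) ** 2 for a in state]

def measure(state, n, rng):
    """Sample one bit string according to the Born rule."""
    idx = rng.choices(range(len(state)), weights=probabilities(state))[0]
    return format(idx, f"0{n}b")

# Hadamard gate.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

# Superposition: H on a single qubit gives equal weight to 0 and 1.
single = apply_single(zero_state(1), 1, 0, H)

# Entanglement: H on qubit 0, then CNOT(0 -> 1), gives (|00> + |11>) / sqrt(2).
bell = apply_cnot(apply_single(zero_state(2), 2, 0, H), 2, 0, 1)

if __name__ == "__main__":
    print([round(p, 3) for p in probabilities(single)])
    print([round(p, 3) for p in probabilities(bell)])
    rng = random.Random(0)
    print([measure(bell, 2, rng) for _ in range(10)])
```

Sampling the Bell state only ever produces "00" or "11": each qubit on its own looks like a fair coin, but the two outcomes always agree, which is the correlation described for entanglement. The list of amplitudes also doubles with every added qubit (30 qubits already need 2^30 complex numbers), which illustrates why simulating quantum systems on classical hardware quickly becomes impractical.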